About

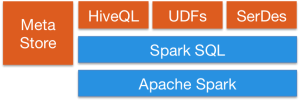

Hive is the default Spark catalog.

Since Spark 2.0, Spark SQL supports builtin Hive features such as:

See:

Articles Related

Enable

- The SparkSession must be instantiated with Hive support

String warehouseLocation = new File("spark-warehouse").getAbsolutePath();

SparkSession spark = SparkSession

.builder()

.appName("Java Spark Hive Example")

.config("spark.sql.warehouse.dir", warehouseLocation)

.enableHiveSupport() // Hive support

.getOrCreate();

Default

Users who do not have an existing Hive deployment can still enable Hive support.

When not configured by the hive-site.xml, the context automatically:

- creates metastore_db in the current directory

- creates a directory configured by spark.sql.warehouse.dir, which defaults to the directory spark-warehouse in the current directory that the Spark application is started.

Management

Configuration

Dependency

Since Hive has a large number of dependencies, these dependencies are not included in the default Spark distribution.

On all of the worker nodes, the following must be installed on the classpath:

- Hive dependencies

File

Configuration of Hive is done by placing:

- core-site.xml

- and hdfs-site.xml files

in:

- the conf directory of spark. SPARK_HOME/conf/

- of Hadoop

Options

Server

Metastore

Example of configuration file for a local installation in a test environment.

<configuration>

<property>

<name>hive.exec.scratchdir</name>

<value>C:\spark-2.2.0-metastore\scratchdir</value>

<description>Scratch space for Hive jobs</description>

</property>

<property>

<name>hive.metastore.warehouse.dir</name>

<value>C:\spark-2.2.0-metastore\spark-warehouse</value>

<description>Spark Warehouse</description>

</property>

<property>

<name>javax.jdo.option.ConnectionURL</name>

<value>jdbc:derby:c:/spark-2.2.0-metastore/metastore_db;create=true</value>

<description>JDBC connect string for a JDBC metastore</description>

</property>

<property>

<name>javax.jdo.option.ConnectionDriverName</name>

<value>org.apache.derby.jdbc.EmbeddedDriver</value>

<description>Driver class name for a JDBC metastore</description>

</property>

</configuration>

Database

The default metastore is created with Derby if not set.

Warehouse

Search Algorithm:

- If the setting value of spark.sql.warehouse.dir is not null, spark.sql.warehouse.dir

- If the setting value of hive.metastore.warehouse.dir is not null, hive.metastore.warehouse.dir

- otherwise workingDir/spark-warehouse/

The doc said that the hive.metastore.warehouse.dir property in hive-site.xml is deprecated since Spark 2.0.0.

Example of log output:

18/07/01 00:10:50 INFO SharedState: spark.sql.warehouse.dir is not set, but hive.metastore.warehouse.dir is set. Setting spark.sql.warehouse.dir to the value of hive.metastore.warehouse.dir ('C:\spark-2.2.0-metastore\spark-warehouse').

18/07/01 00:10:50 INFO SharedState: Warehouse path is 'C:\spark-2.2.0-metastore\spark-warehouse'.

Normally, it will be found in the hive-site.xml file but you can change it via code:

Path warehouseLocation = Paths.get("target","spark-warehouse");

SparkSession spark = SparkSession

.builder()

.appName("Java Spark Hive Example")

.config("spark.sql.warehouse.dir", warehouseLocation.toAbsolutePath().toString())

.enableHiveSupport()

.getOrCreate();

Table

From saving-to-persistent-tables

DataFrames can also be saved as persistent tables into Hive metastore using the saveAsTable command.

A DataFrame for a persistent table can be created by calling the table method on a SparkSession with the name of the table.

Internal

Starting from Spark 2.1, persistent datasource tables have per-partition metadata stored in the Hive metastore.

Partition Hive DDLs are supported

ALTER TABLE PARTITION ... SET LOCATION

External

For an external table, you can specify the table path via the path option

df.write.option("path", "/some/path").saveAsTable("t")

The partition information is not gathered by default when creating external datasource tables (those with a path option). To sync the partition information in the metastore, you can invoke MSCK REPAIR TABLE.