About

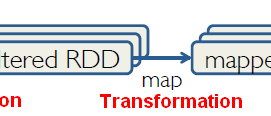

map is Spark's implementation of the map step of MapReduce.

- map(func) returns a new distributed dataset formed by passing each element of the source through the function func.
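The one-to-one behaviour described above can be sketched without a cluster. This is a plain-Python analogy, not Spark code: `local_map` is a hypothetical helper that stands in for `rdd.map(func).collect()` on an ordinary list.

```python
def local_map(func, elements):
    """Apply func to every element and return a new list.

    Hypothetical stand-in for rdd.map(func).collect(); the input is not modified.
    """
    return [func(x) for x in elements]


source = [1, 2, 3, 4]
doubled = local_map(lambda x: x * 2, source)

print(doubled)  # [2, 4, 6, 8]
print(source)   # [1, 2, 3, 4] (the source is left unchanged)
```

The result always has exactly as many elements as the source, which is the main difference from flatMap.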

Example

Multiplication

- PySpark: lambda function

rdd = sc.parallelize([1,2,3,4])

rdd.map(lambda x: x * 2).collect()

[2,4,6,8]

- def function

rdd = sc.parallelize([1,2,3,4])

def plus5(x):
    return x+5

rdd.map(plus5).collect()

[6, 7, 8, 9]

flatMap

On a list

rdd = sc.parallelize([1,2,3])

rdd.flatMap(lambda x:[x,x+5]).collect()

[1,6,2,7,3,8]

On a list of lists

sc.parallelize([[1,2],[3,4]]).flatMap(lambda x:x).collect()

[1, 2, 3, 4]
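One way to read the two flatMap results above is "map, then flatten one level". The sketch below is a plain-Python analogy rather than Spark code; `local_flat_map` is a hypothetical helper introduced only for illustration.

```python
from itertools import chain


def local_flat_map(func, elements):
    """Apply func to each element, then concatenate the returned iterables.

    Hypothetical stand-in for rdd.flatMap(func).collect().
    """
    return list(chain.from_iterable(func(x) for x in elements))


# A plain map keeps one (nested) result per input element.
nested = [[x, x + 5] for x in [1, 2, 3]]
print(nested)     # [[1, 6], [2, 7], [3, 8]]

# The flatMap analogue removes that one level of nesting.
pairs = local_flat_map(lambda x: [x, x + 5], [1, 2, 3])
print(pairs)      # [1, 6, 2, 7, 3, 8]

# With the identity function, a list of lists is simply flattened.
flattened = local_flat_map(lambda x: x, [[1, 2], [3, 4]])
print(flattened)  # [1, 2, 3, 4]
```

Unlike map, the output length can differ from the input length, because each element may yield zero, one, or several results.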

Key Value

Transformations on key-value pairs (tuples) are supported.

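from operator import add

wordCounts = sc.parallelize([('rat', 2), ('elephant', 1), ('cat', 2)])

# Python 3 lambdas cannot unpack a tuple in the parameter list, so index the pair instead
totalCount = (wordCounts
              .map(lambda kv: kv[1])
              .reduce(add))

# count() returns the number of elements without collecting them to the driver
average = totalCount / float(wordCounts.count())

print(totalCount)

print(round(average, 2))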

5

1.67
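The map-then-reduce pattern in this example can be checked locally. The following is a plain-Python sketch of the same arithmetic, assuming the data fits in an ordinary list; it uses `functools.reduce` in place of the RDD's reduce, and the variable names are illustrative only.

```python
from functools import reduce
from operator import add

word_counts = [('rat', 2), ('elephant', 1), ('cat', 2)]

# Map step: keep only the value of each (key, value) pair.
counts = [count for _word, count in word_counts]

# Reduce step: fold the values into a single total.
total_count = reduce(add, counts)

average = total_count / len(word_counts)

print(total_count)        # 5
print(round(average, 2))  # 1.67
```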