Spark - Java API

Management

Dependency

<dependency>
  <groupId>org.apache.spark</groupId>
  <artifactId>spark-core_2.11</artifactId>
  <version>2.2.1</version><!--2.3.0-->
</dependency>

In addition, if you wish to access an HDFS cluster, you need to add a dependency on hadoop-client for your version of HDFS.

groupId = org.apache.hadoop

artifactId = hadoop-client

version = <your-hdfs-version>

Submit

- Local

spark-submit --class "packageToThe.Main" --master local ./pathTo/my.jar

- YARN cluster (client deploy mode)

spark-submit --class "packageToThe.Main" --master yarn --deploy-mode client ./pathTo/my.jar

Example: Run application locally on 8 cores

./bin/spark-submit \
  --class org.apache.spark.examples.SparkPi \
  --master local[8] \
  /path/to/examples.jar \
  100
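The submit commands above all share one layout: spark-submit options first, then the application jar, then the arguments handed to the application's main method. As a minimal sketch of that layout, the following assembles such a command as an argument list. The class SubmitCommand and its build method are hypothetical helpers for illustration, not part of Spark; only the flags shown above (--class, --master, --deploy-mode) are used.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical helper (not part of Spark): assembles a spark-submit argument list.
final class SubmitCommand {

    // deployMode may be null, in which case --deploy-mode is omitted (as in the local examples).
    static List<String> build(String mainClass, String master, String deployMode,
                              String jarPath, String... appArgs) {
        List<String> cmd = new ArrayList<>();
        cmd.add("spark-submit");
        cmd.add("--class");
        cmd.add(mainClass);
        cmd.add("--master");
        cmd.add(master);
        if (deployMode != null) {
            cmd.add("--deploy-mode");
            cmd.add(deployMode);
        }
        cmd.add(jarPath);            // the application jar follows all spark-submit options
        for (String arg : appArgs) { // everything after the jar goes to the application's main
            cmd.add(arg);
        }
        return cmd;
    }

    public static void main(String[] args) {
        // Same shape as the SparkPi example: local master, one application argument.
        List<String> cmd = build("org.apache.spark.examples.SparkPi", "local[8]", null,
                "/path/to/examples.jar", "100");
        System.out.println(String.join(" ", cmd));
        // To launch it for real, one option is: new ProcessBuilder(cmd).inheritIO().start();
    }
}
```

Passing "yarn" as the master and "client" as the deploy mode would reproduce the YARN command in the same way.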

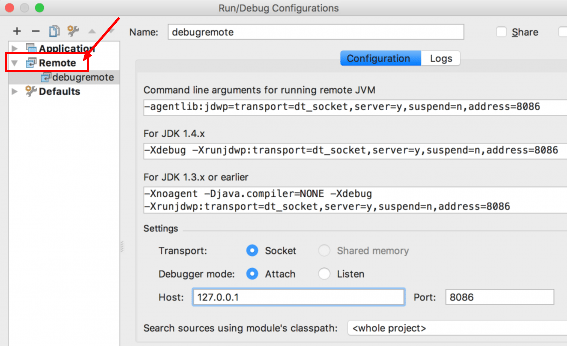

Remote Debug

Remotely

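- On the machine where spark-submit runs, set the following before submitting. The JVM then listens on port 8086 for a debugger and, because of suspend=n, starts without waiting for one to attach.

export SPARK_JAVA_OPTS=-agentlib:jdwp=transport=dt_socket,server=y,suspend=n,address=8086

SPARK_JAVA_OPTS has been deprecated since Spark 1.0; the same -agentlib string can instead be passed through the --driver-java-options flag of spark-submit.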

- Idea

Note

No need to install Spark locally … Below is the output of a wordCount app.

Using Spark's default log4j profile: org/apache/spark/log4j-defaults.properties

18/06/04 13:44:39 INFO SparkContext: Running Spark version 2.2.1

18/06/04 13:44:40 WARN NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

18/06/04 13:44:40 INFO SparkContext: Submitted application: Word Count

18/06/04 13:44:40 INFO SecurityManager: Changing view acls to: gerardn

18/06/04 13:44:40 INFO SecurityManager: Changing modify acls to: gerardn

18/06/04 13:44:40 INFO SecurityManager: Changing view acls groups to:

18/06/04 13:44:41 INFO SecurityManager: Changing modify acls groups to:

18/06/04 13:44:41 INFO SecurityManager: SecurityManager: authentication disabled; ui acls disabled; users with view permissions: Set(gerardn); groups with view permissions: Set(); users with modify permissions: Set(gerardn); groups with modify permissions: Set()

18/06/04 13:44:41 INFO Utils: Successfully started service 'sparkDriver' on port 53894.

18/06/04 13:44:41 INFO SparkEnv: Registering MapOutputTracker

18/06/04 13:44:42 INFO SparkEnv: Registering BlockManagerMaster

18/06/04 13:44:42 INFO BlockManagerMasterEndpoint: Using org.apache.spark.storage.DefaultTopologyMapper for getting topology information

18/06/04 13:44:42 INFO BlockManagerMasterEndpoint: BlockManagerMasterEndpoint up

18/06/04 13:44:42 INFO DiskBlockManager: Created local directory at C:\Users\gerardn\AppData\Local\Temp\2\blockmgr-b6b20866-ce03-4cb1-bc9c-b1f8e88fc152

18/06/04 13:44:42 INFO MemoryStore: MemoryStore started with capacity 912.3 MB

18/06/04 13:44:42 INFO SparkEnv: Registering OutputCommitCoordinator

18/06/04 13:44:42 INFO Utils: Successfully started service 'SparkUI' on port 4040.

18/06/04 13:44:42 INFO SparkUI: Bound SparkUI to 0.0.0.0, and started at http://10.10.2.16:4040

18/06/04 13:44:42 INFO Executor: Starting executor ID driver on host localhost

18/06/04 13:44:42 INFO Utils: Successfully started service 'org.apache.spark.network.netty.NettyBlockTransferService' on port 53901.

18/06/04 13:44:42 INFO NettyBlockTransferService: Server created on 10.10.2.16:53901

18/06/04 13:44:42 INFO BlockManager: Using org.apache.spark.storage.RandomBlockReplicationPolicy for block replication policy

18/06/04 13:44:42 INFO BlockManagerMaster: Registering BlockManager BlockManagerId(driver, 10.10.2.16, 53901, None)

18/06/04 13:44:42 INFO BlockManagerMasterEndpoint: Registering block manager 10.10.2.16:53901 with 912.3 MB RAM, BlockManagerId(driver, 10.10.2.16, 53901, None)

18/06/04 13:44:42 INFO BlockManagerMaster: Registered BlockManager BlockManagerId(driver, 10.10.2.16, 53901, None)

18/06/04 13:44:42 INFO BlockManager: Initialized BlockManager: BlockManagerId(driver, 10.10.2.16, 53901, None)

18/06/04 13:44:43 INFO MemoryStore: Block broadcast_0 stored as values in memory (estimated size 214.5 KB, free 912.1 MB)

18/06/04 13:44:44 INFO MemoryStore: Block broadcast_0_piece0 stored as bytes in memory (estimated size 20.4 KB, free 912.1 MB)

18/06/04 13:44:44 INFO BlockManagerInfo: Added broadcast_0_piece0 in memory on 10.10.2.16:53901 (size: 20.4 KB, free: 912.3 MB)

18/06/04 13:44:44 INFO SparkContext: Created broadcast 0 from textFile at GettingStarted.java:24

18/06/04 13:44:44 INFO FileInputFormat: Total input paths to process : 1

18/06/04 13:44:44 INFO SparkContext: Starting job: count at GettingStarted.java:30

18/06/04 13:44:44 INFO DAGScheduler: Registering RDD 3 (mapToPair at GettingStarted.java:27)

18/06/04 13:44:44 INFO DAGScheduler: Got job 0 (count at GettingStarted.java:30) with 1 output partitions

18/06/04 13:44:44 INFO DAGScheduler: Final stage: ResultStage 1 (count at GettingStarted.java:30)

18/06/04 13:44:44 INFO DAGScheduler: Parents of final stage: List(ShuffleMapStage 0)

18/06/04 13:44:44 INFO DAGScheduler: Missing parents: List(ShuffleMapStage 0)

18/06/04 13:44:44 INFO DAGScheduler: Submitting ShuffleMapStage 0 (MapPartitionsRDD[3] at mapToPair at GettingStarted.java:27), which has no missing parents

18/06/04 13:44:44 INFO MemoryStore: Block broadcast_1 stored as values in memory (estimated size 6.0 KB, free 912.1 MB)

18/06/04 13:44:44 INFO MemoryStore: Block broadcast_1_piece0 stored as bytes in memory (estimated size 3.3 KB, free 912.1 MB)

18/06/04 13:44:44 INFO BlockManagerInfo: Added broadcast_1_piece0 in memory on 10.10.2.16:53901 (size: 3.3 KB, free: 912.3 MB)

18/06/04 13:44:44 INFO SparkContext: Created broadcast 1 from broadcast at DAGScheduler.scala:1006

18/06/04 13:44:44 INFO DAGScheduler: Submitting 1 missing tasks from ShuffleMapStage 0 (MapPartitionsRDD[3] at mapToPair at GettingStarted.java:27) (first 15 tasks are for partitions Vector(0))

18/06/04 13:44:44 INFO TaskSchedulerImpl: Adding task set 0.0 with 1 tasks

18/06/04 13:44:44 INFO TaskSetManager: Starting task 0.0 in stage 0.0 (TID 0, localhost, executor driver, partition 0, PROCESS_LOCAL, 4878 bytes)

18/06/04 13:44:44 INFO Executor: Running task 0.0 in stage 0.0 (TID 0)

18/06/04 13:44:44 INFO HadoopRDD: Input split: file:/C:/Users/gerardn/git/spark-bb/src/main/resources/shakespeare.txt:0+5447172

18/06/04 13:44:46 INFO Executor: Finished task 0.0 in stage 0.0 (TID 0). 1151 bytes result sent to driver

18/06/04 13:44:46 INFO TaskSetManager: Finished task 0.0 in stage 0.0 (TID 0) in 2038 ms on localhost (executor driver) (1/1)

18/06/04 13:44:46 INFO DAGScheduler: ShuffleMapStage 0 (mapToPair at GettingStarted.java:27) finished in 2.053 s

18/06/04 13:44:46 INFO DAGScheduler: looking for newly runnable stages

18/06/04 13:44:46 INFO DAGScheduler: running: Set()

18/06/04 13:44:46 INFO DAGScheduler: waiting: Set(ResultStage 1)

18/06/04 13:44:46 INFO DAGScheduler: failed: Set()

18/06/04 13:44:46 INFO DAGScheduler: Submitting ResultStage 1 (ShuffledRDD[4] at reduceByKey at GettingStarted.java:28), which has no missing parents

18/06/04 13:44:46 INFO MemoryStore: Block broadcast_2 stored as values in memory (estimated size 3.5 KB, free 912.1 MB)

18/06/04 13:44:46 INFO MemoryStore: Block broadcast_2_piece0 stored as bytes in memory (estimated size 2.1 KB, free 912.1 MB)

18/06/04 13:44:46 INFO BlockManagerInfo: Added broadcast_2_piece0 in memory on 10.10.2.16:53901 (size: 2.1 KB, free: 912.3 MB)

18/06/04 13:44:46 INFO SparkContext: Created broadcast 2 from broadcast at DAGScheduler.scala:1006

18/06/04 13:44:46 INFO DAGScheduler: Submitting 1 missing tasks from ResultStage 1 (ShuffledRDD[4] at reduceByKey at GettingStarted.java:28) (first 15 tasks are for partitions Vector(0))

18/06/04 13:44:46 INFO TaskSchedulerImpl: Adding task set 1.0 with 1 tasks

18/06/04 13:44:46 INFO TaskSchedulerImpl: Removed TaskSet 0.0, whose tasks have all completed, from pool

18/06/04 13:44:46 INFO TaskSetManager: Starting task 0.0 in stage 1.0 (TID 1, localhost, executor driver, partition 0, ANY, 4621 bytes)

18/06/04 13:44:46 INFO Executor: Running task 0.0 in stage 1.0 (TID 1)

18/06/04 13:44:46 INFO ShuffleBlockFetcherIterator: Getting 1 non-empty blocks out of 1 blocks

18/06/04 13:44:46 INFO ShuffleBlockFetcherIterator: Started 0 remote fetches in 0 ms

18/06/04 13:44:47 INFO Executor: Finished task 0.0 in stage 1.0 (TID 1). 1133 bytes result sent to driver

18/06/04 13:44:47 INFO TaskSetManager: Finished task 0.0 in stage 1.0 (TID 1) in 484 ms on localhost (executor driver) (1/1)

18/06/04 13:44:47 INFO TaskSchedulerImpl: Removed TaskSet 1.0, whose tasks have all completed, from pool

18/06/04 13:44:47 INFO DAGScheduler: ResultStage 1 (count at GettingStarted.java:30) finished in 0.500 s

18/06/04 13:44:47 INFO DAGScheduler: Job 0 finished: count at GettingStarted.java:30, took 3.024591 s
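Read together, the log lines name the steps of the job: textFile (GettingStarted.java:24), mapToPair (:27), reduceByKey (:28) and count (:30), with the shuffle between mapToPair and reduceByKey splitting the work into two stages. The source of GettingStarted.java is not shown here, so the following is only a plain-Java analog of what such a pipeline computes, without any Spark dependency. The class name WordCountSketch, the whitespace tokenization, and the sample input are assumptions made for illustration.

```java
import java.util.Arrays;
import java.util.List;
import java.util.Map;
import java.util.function.Function;
import java.util.stream.Collectors;

// Plain-Java analog of the job seen in the log; this is NOT the Spark API.
final class WordCountSketch {

    // Returns word -> occurrences, the in-memory counterpart of mapToPair + reduceByKey.
    static Map<String, Long> countWords(List<String> lines) {
        return lines.stream()
                // Assumed tokenization: split each line on runs of whitespace.
                .flatMap(line -> Arrays.stream(line.trim().split("\\s+")))
                .filter(word -> !word.isEmpty())
                // Group equal words and count them.
                .collect(Collectors.groupingBy(Function.identity(), Collectors.counting()));
    }

    public static void main(String[] args) {
        List<String> lines = Arrays.asList("to be or not to be", "that is the question");
        Map<String, Long> counts = countWords(lines);
        System.out.println(counts.get("to")); // occurrences of "to": 2
        System.out.println(counts.size());    // distinct words: 8
    }
}
```

In this analog, the final size() plays the role of count() in the log: applied after reduceByKey, it yields the number of distinct words rather than the total number of words.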

Documentation / Reference

- The combined Java and Scala API documentation, which includes the Java classes, can be found at

- Java Package such as

- …