About

Spark has several logical representations for a relation (table).

- Spark Dataset and DataFrame (a Dataset of rows). Archaic: the DataFrame was previously called SchemaRDD (cf. Spark < 1.3).

- Spark Resilient Distributed Datasets (RDDs)

These data structures are all:

- distributed

- abstractions for selecting, filtering, aggregating and plotting structured data (cf. R, Pandas) using functional transformations (map, flatMap, filter, etc.)
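As a rough illustration of the functional transformations named above, the following plain-Python sketch mimics what map, flatMap and filter do on a local list. It does not use Spark: the helper `flat_map` and the sample `lines` are hypothetical, and in Spark the same operations would be evaluated across a cluster rather than on one list.

```python
from itertools import chain

# Hypothetical sample data standing in for a distributed collection
lines = ["a b", "c", "d e f"]


def flat_map(func, items):
    """Apply func to each item and flatten the resulting lists by one level."""
    return list(chain.from_iterable(func(item) for item in items))


# map: exactly one output element per input element
lengths = list(map(len, lines))

# flatMap: each input element may yield zero or more output elements
words = flat_map(lambda line: line.split(" "), lines)

# filter: keep only the elements that satisfy the predicate
long_lines = list(filter(lambda line: len(line) > 1, lines))
```

The difference between map and flatMap is the one that most often surprises newcomers: mapping `split` over the lines would give a list of lists, whereas flatMap returns a single flat list of words.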

Type

The Dataset

The Dataset can be considered a combination of DataFrames and RDDs. It provides the typed interface available in RDDs along with many of the conveniences of DataFrames. It will be the core abstraction going forward.

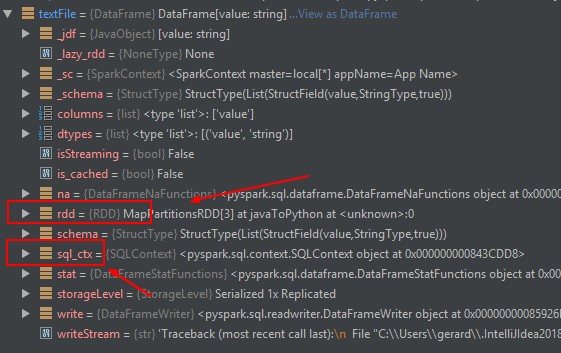

The DataFrame

The DataFrame is a distributed collection of Row objects. It is similar in concept to Python pandas and R data frames.

The RDD (Resilient Distributed Dataset)

The RDD, or Resilient Distributed Dataset, is the original data structure of Spark.

It is a sequence of data objects, of one or more types, that is partitioned across the machines of a cluster.
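To make the idea of data spread over several machines more concrete, here is a plain-Python sketch in which inner lists stand in for partitions. The names `partition` and `num_partitions` are hypothetical, and the round-robin split is an assumption made for illustration, not a description of how Spark assigns records.

```python
def partition(items, num_partitions):
    """Split items into num_partitions lists, round-robin (illustrative only)."""
    return [items[i::num_partitions] for i in range(num_partitions)]


records = [1, 2, 3, 4, 5, 6, 7]

# Each inner list plays the role of one machine's share of the data
partitions = partition(records, 3)

# A transformation is applied to every partition independently...
squared = [[x * x for x in part] for part in partitions]

# ...and an action combines the per-partition results into a single value
total = sum(sum(part) for part in squared)
```

Because each partition is processed without reference to the others, the per-partition step could run on different machines at the same time; only the final combination needs to bring results together.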

New users should focus on Datasets, as they will be a superset of the current RDD functionality.

Data Structure

The two scripts below provide the same functionality: each computes an average per key.

RDD

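data = sc.textFile(...).map(lambda line: line.split("\t"))  # one list of fields per line

(data.map(lambda x: (x[0], [int(x[1]), 1]))                 # (key, [value, 1])

     .reduceByKey(lambda x, y: [x[0] + y[0], x[1] + y[1]])  # per key: [sum, count]

     .map(lambda x: [x[0], x[1][0] / x[1][1]])              # [key, average]

     .collect())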
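The sum-and-count logic of the RDD script can be traced on a small input without a cluster. The sketch below is plain Python, not Spark: the sample `lines` are hypothetical, and the grouping dictionary is only a local stand-in for what reduceByKey does across partitions.

```python
from collections import defaultdict
from functools import reduce

# Hypothetical tab-separated input lines: a key, then an integer value
lines = ["alice\t30", "bob\t20", "alice\t40"]

# map: parse each line into (key, [value, 1])
fields = [line.split("\t") for line in lines]
pairs = [(f[0], [int(f[1]), 1]) for f in fields]

# reduceByKey: collect the values of each key, then fold them pairwise
groups = defaultdict(list)
for key, value in pairs:
    groups[key].append(value)
sums = {
    key: reduce(lambda x, y: [x[0] + y[0], x[1] + y[1]], values)
    for key, values in groups.items()
}

# map: divide the accumulated sum by the accumulated count
averages = {key: total / count for key, (total, count) in sums.items()}
```

Carrying the pair [sum, count] through the reduction, instead of averaging directly, is what keeps the result correct when values for one key are combined in several steps: averages of averages would be wrong whenever the groups differ in size.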

Data Frame

- Using DataFrames: write less code for the same aggregation

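from pyspark.sql.functions import avg

(sqlCtx.table("people")

    .groupBy("name")     # current Spark versions keep the grouping column in the result

    .agg(avg("age"))     # agg() expects Column expressions, so only the aggregate is passed

    .collect())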