About

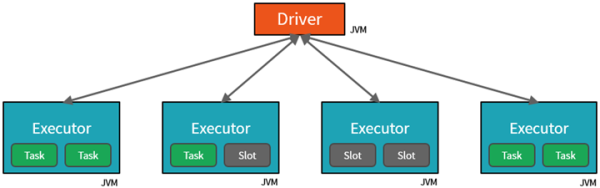

Cores (or slots) are the number of threads available to each executor (and possibly to other Spark daemons as well).

They are unrelated to the number of physical CPU cores. See below.

Slots indicate the threads available to Spark for performing parallel work. Spark documentation often calls these threads cores, which is confusing: the number of slots available on a particular machine does not necessarily have any relationship to the number of physical CPU cores on that machine.
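As a rough illustration, the slot count can be derived from configuration values alone, without looking at the hardware. This sketch assumes the usual mapping where each task occupies `spark.task.cpus` cores (default 1), so an executor with `spark.executor.cores` cores runs `executor_cores // task_cpus` tasks at once. The function `task_slots` is hypothetical and not part of any Spark API.

```python
import os


def task_slots(num_executors: int, executor_cores: int, task_cpus: int = 1) -> int:
    """Hypothetical helper: total concurrent task slots in an application.

    Each executor runs executor_cores // task_cpus tasks at the same time.
    Note that the machine's CPU count is never consulted: slots come only
    from configuration.
    """
    if num_executors < 0 or executor_cores < 1 or task_cpus < 1:
        raise ValueError("invalid configuration")
    return num_executors * (executor_cores // task_cpus)


# 4 executors declared with 4 cores each give 16 slots,
# whatever the host hardware actually has.
print(task_slots(4, 4))               # 16
print(task_slots(4, 4, task_cpus=2))  # 8
# Logical CPUs reported by the OS, shown only for contrast.
print("logical CPUs on this host:", os.cpu_count())
```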

Configuration

The number of cores can be configured:

- at the Spark JVM daemon level. See:

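- or for the whole cluster:

  - spark.cores.max: the maximum number of cores an application requests from across the whole cluster, not from each machine (standalone and coarse-grained Mesos deployments)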
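The two levels can be combined on a `spark-submit` command line. The sketch below is a hypothetical helper that only builds the argument list (it does not launch Spark): `--executor-cores` sets `spark.executor.cores` for each executor JVM, and `--conf spark.cores.max=...` caps the cores the application takes from the whole cluster. The bound computed by `max_executors` assumes standalone-mode scheduling with both values fixed; treat it as an upper limit, not a guarantee.

```python
def spark_submit_args(app: str, executor_cores: int, cores_max: int) -> list[str]:
    """Hypothetical helper: build a spark-submit argument list.

    --executor-cores      -> cores (slots) per executor JVM
    spark.cores.max       -> total cores for the application, cluster-wide
    """
    if executor_cores < 1 or cores_max < executor_cores:
        raise ValueError("need cores_max >= executor_cores >= 1")
    return [
        "spark-submit",
        "--executor-cores", str(executor_cores),
        "--conf", f"spark.cores.max={cores_max}",
        app,
    ]


def max_executors(executor_cores: int, cores_max: int) -> int:
    """Upper bound on executors when both settings are fixed (assumption)."""
    return cores_max // executor_cores


args = spark_submit_args("my_app.py", executor_cores=2, cores_max=8)
print(" ".join(args))
# spark-submit --executor-cores 2 --conf spark.cores.max=8 my_app.py
print(max_executors(2, 8))  # 4
```

With these values the application can hold at most 8 cores in total, split into executors of 2 cores each, regardless of how many physical cores the worker machines have.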