About

Lexer are also known as:

- Lexical analyzer

- Lexical Tokenizer

- Lexical Scanner

Example

Consider the following expression:

sum = 3 + 2;

A lexer will tokenized it in the following symbol table:

| Token | |

|---|---|

| Lexeme | Token type |

| sum | Identifier |

| = | Assignment operator |

| 3 | Integer literal |

| + | Addition operator |

| 2 | Integer literal |

| ; | End of statement |

Definition

A lexer defines how the contents of a file is broken into tokens.

A lexer is ultimately implemented as finite automata.

A lexer reads an input character or byte stream (i.e. characters, binary data, etc.), divides it into tokens using:

- patterns (rules) specified in a grammar file or in the code.

- and generates a token stream as output.

This process of transforming an input text into discrete components called tokens is known as:

- lexical analysis,

- lexing

- tokenizing

- or simply scanning.

A Lexer is a stateful stream generator (ie the position in the source file is saved). Every time it is advanced, it returns the next token in the Source. Normally, the final Token emitted by the lexer is a EOF and it will repeatedly return the same EOF token whenever called.

Lexical analysis is the first step of a compiler. In the second step, the tokens can then then processed by a parser.

Lexers are generally quite simple and does nothing with the tokens. Most of the complexity is deferred to the following steps:

- or semantic analysis 1) (seeing if there is a problem, a possible optimization, …)

For example, a typical lexical analyzer recognizes parentheses as tokens, but does nothing to ensure that each “(” is matched with a “)”. This syntax analysis is left to the parser.

Steps

Tokenizing is generally done in a single pass.

- Reads in a input stream of characters from a scanner

- Groups the characters into meaningful sequences called lexemes that adheres to the grammar. Tokens are frequently in the rule defined by regular expressions.

- Categorize each lexeme (sequence of characters) into tokens (symbols of the vocabulary of the language). If the lexer finds an invalid token, it will report an error.

Implementation

Compiler-Compiler

Lexers can be generated by automated tools called compiler-compiler.

They are working:

- from a grammar file that defines the lexer rules

- then generates the lexer as code

Manually

You can also write your own lexer manually.

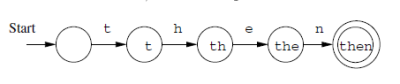

They all use ultimately a finite automaton modeling the recognition of the keyword then

where:

- the state (circle) represents a different position in the word then that has been reached so far that ranges from:

- the empty string (ie nothing)

- to the complete word

Example:

Why not using simply a regular expression (Lexer vs Regexp)

Why not using simply a regular expression

- no lexical mode: The lexical mode (or context) is important when you want your parsing rules to be applied conditionally. The most obvious being for instance a html pre where you don't want any parsing inside.