About

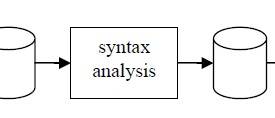

A compiler-compiler splits the work between a lexer and a parser:

- The lexer reads text data (file, string,…) and divides it into tokens using lexer rules (patterns). Its output is a list of tokens, also known as a token stream.

- The parser reads the token stream generated by the lexer and matches phrases defined by the parser rules (token patterns) to build an Abstract Syntax Tree.

- The generated syntax tree represents the structure of the program. This tree then serves as input to other applications (code analysis, code translation, …)
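The pipeline above can be sketched by hand for a tiny arithmetic language. This is only an illustration, not the output of any compiler-compiler: the rule names (`NUMBER`, `PLUS`, `expr`, `term`, `factor`) and the tuple-based tree are assumptions made for the example.

```python
import re
from typing import NamedTuple


class Token(NamedTuple):
    kind: str
    text: str


# Lexer rules: one (hypothetical) token name per pattern.
LEXER_RULES = [
    ("NUMBER", r"\d+"),
    ("PLUS", r"\+"),
    ("STAR", r"\*"),
    ("LPAREN", r"\("),
    ("RPAREN", r"\)"),
    ("SKIP", r"\s+"),
]
MASTER = re.compile("|".join(f"(?P<{k}>{p})" for k, p in LEXER_RULES))


def tokenize(text):
    """Lexer: turn the input text into a token stream."""
    tokens, pos = [], 0
    while pos < len(text):
        m = MASTER.match(text, pos)
        if m is None:
            raise SyntaxError(f"unexpected character {text[pos]!r} at offset {pos}")
        if m.lastgroup != "SKIP":  # whitespace is matched but not emitted
            tokens.append(Token(m.lastgroup, m.group()))
        pos = m.end()
    return tokens


class Parser:
    """Parser rules (token patterns):
        expr   : term (PLUS term)*
        term   : factor (STAR factor)*
        factor : NUMBER | LPAREN expr RPAREN
    """

    def __init__(self, tokens):
        self.tokens, self.pos = tokens, 0

    def peek(self):
        return self.tokens[self.pos].kind if self.pos < len(self.tokens) else "EOF"

    def expect(self, kind):
        if self.peek() != kind:
            raise SyntaxError(f"expected {kind}, found {self.peek()}")
        tok = self.tokens[self.pos]
        self.pos += 1
        return tok

    def expr(self):
        node = self.term()
        while self.peek() == "PLUS":
            self.expect("PLUS")
            node = ("+", node, self.term())
        return node

    def term(self):
        node = self.factor()
        while self.peek() == "STAR":
            self.expect("STAR")
            node = ("*", node, self.factor())
        return node

    def factor(self):
        if self.peek() == "LPAREN":
            self.expect("LPAREN")
            node = self.expr()
            self.expect("RPAREN")
            return node
        return ("num", int(self.expect("NUMBER").text))


def parse(text):
    """Run lexer then parser and return the syntax tree as nested tuples."""
    parser = Parser(tokenize(text))
    tree = parser.expr()
    if parser.peek() != "EOF":
        raise SyntaxError(f"unexpected token {parser.peek()}")
    return tree


print(parse("1 + 2 * 3"))  # ('+', ('num', 1), ('*', ('num', 2), ('num', 3)))
```

The nested tuple is the syntax tree: a later stage (an evaluator, a translator, a linter) would walk it rather than re-read the text.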

A compiler-compiler generates the lexer and the parser from a language description file called a grammar.

Parsers and lexical analysers are long and complex components. A software engineer writing an efficient lexical analyser or parser directly has to carefully consider the interactions between the rules.

Application

- Language translation: translate one language to another

- Language creation:

Build process

Because a compiler-compiler must first generate the lexer and the parser, the build process is a little more complicated:

- First, the compiler-compiler generates the lexer and parser from the grammar file.

- Then, you can use the generated lexer and parser to compile the code.
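The two build steps can be mimicked with a toy generator. The sketch below is not how any of the tools listed on this page works internally: the grammar syntax (`NAME : pattern`), the function names `generate_lexer_source` and `build_lexer`, and the use of `exec` as the "compile" step are all assumptions chosen to keep the example short.

```python
# A made-up, one-rule-per-line grammar format (hypothetical, for illustration).
GRAMMAR = r"""
NUMBER : [0-9]+
IDENT  : [A-Za-z_][A-Za-z_0-9]*
EQUALS : =
SKIP   : [ \t]+
"""


def generate_lexer_source(grammar: str) -> str:
    """Step 1: the 'compiler-compiler' turns the grammar into lexer source code."""
    rules = []
    for line in grammar.strip().splitlines():
        name, pattern = line.split(":", 1)
        rules.append((name.strip(), pattern.strip()))
    master = "|".join(f"(?P<{name}>{pattern})" for name, pattern in rules)
    # The returned string is ordinary Python source for a tokenize() function.
    return (
        "import re\n"
        f"_MASTER = re.compile({master!r})\n"
        "def tokenize(text):\n"
        "    tokens, pos = [], 0\n"
        "    while pos < len(text):\n"
        "        m = _MASTER.match(text, pos)\n"
        "        if m is None:\n"
        "            raise SyntaxError(f'unexpected character at offset {pos}')\n"
        "        if m.lastgroup != 'SKIP':\n"
        "            tokens.append((m.lastgroup, m.group()))\n"
        "        pos = m.end()\n"
        "    return tokens\n"
    )


def build_lexer(grammar: str):
    """Step 2: compile the generated source and hand back the usable lexer."""
    source = generate_lexer_source(grammar)
    namespace = {}
    exec(compile(source, "<generated lexer>", "exec"), namespace)
    return namespace["tokenize"]


tokenize = build_lexer(GRAMMAR)
print(tokenize("x = 42"))  # [('IDENT', 'x'), ('EQUALS', '='), ('NUMBER', '42')]
```

In a real project the generated source is usually written to disk and compiled by the normal build tool, which is why the grammar step has to run first.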

Error handling

The lexical analyser and the parser are also responsible for generating error messages when the input does not conform to the lexical or syntactic rules of the language.
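As a minimal sketch of the two kinds of error, the example below reports a lexical error (a character no lexer rule matches) and a syntax error (a token the parser rule does not allow), each with a line and column. The `LanguageError` class, the token names and the single rule `assignment : IDENT EQUALS NUMBER` are hypothetical.

```python
import re


class LanguageError(Exception):
    """Error carrying the phase (lexical or syntax) and the source position."""

    def __init__(self, phase, message, line, column):
        super().__init__(f"{phase} error at line {line}, column {column}: {message}")
        self.phase, self.line, self.column = phase, line, column


TOKEN_RE = re.compile(
    r"(?P<NUMBER>\d+)|(?P<IDENT>[A-Za-z_]\w*)|(?P<EQUALS>=)"
    r"|(?P<NEWLINE>\n)|(?P<SKIP>[ \t]+)"
)


def tokenize(text):
    """Lexer: raises a lexical error when no rule matches the next character."""
    tokens, pos, line, line_start = [], 0, 1, 0
    while pos < len(text):
        column = pos - line_start + 1
        m = TOKEN_RE.match(text, pos)
        if m is None:
            raise LanguageError(
                "lexical", f"unexpected character {text[pos]!r}", line, column
            )
        if m.lastgroup == "NEWLINE":
            line, line_start = line + 1, m.end()
        elif m.lastgroup != "SKIP":
            tokens.append((m.lastgroup, m.group(), line, column))
        pos = m.end()
    return tokens


def parse_assignment(tokens):
    """Parser rule: assignment : IDENT EQUALS NUMBER"""
    expected = ["IDENT", "EQUALS", "NUMBER"]
    for i, want in enumerate(expected):
        if i >= len(tokens):
            # Point just after the last token seen (or at 1:1 for empty input).
            _, text, line, column = tokens[-1] if tokens else ("", "", 1, 1)
            raise LanguageError(
                "syntax", f"expected {want}, found end of input", line, column + len(text)
            )
        kind, text, line, column = tokens[i]
        if kind != want:
            raise LanguageError(
                "syntax", f"expected {want}, found {kind} {text!r}", line, column
            )
    if len(tokens) > len(expected):
        kind, text, line, column = tokens[len(expected)]
        raise LanguageError("syntax", f"unexpected {kind} {text!r}", line, column)
    return (tokens[0][1], int(tokens[2][1]))


for source in ["x = 42", "x = 4$", "x 42"]:
    try:
        print(parse_assignment(tokenize(source)))
    except LanguageError as err:
        print(err)
```

Generated lexers and parsers typically produce messages of this shape automatically from the grammar, so the grammar author does not have to write the checks by hand.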

Tools

ANTLR, JavaCC, SableCC and lex are not parsers or lexical analyzers themselves but generators: each one outputs a lexical analyzer and/or a parser according to a specification that it reads from a file (the grammar).

Flex

JFlex

https://www.jflex.de/ - JFlex is a lexical analyzer generator (also known as scanner generator) for Java, written in Java.

JFlex lexers are based on deterministic finite automata (DFAs).
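To make the DFA idea concrete, here is a small hand-written table-driven scanner. It is not JFlex output and does not reflect JFlex's generated code; the states (`start`, `int`, `ident`), the character classes and the token names are assumptions for the example.

```python
def char_class(ch):
    """Map a character to the input symbol used by the transition table."""
    if "0" <= ch <= "9":
        return "digit"
    if ch == "_" or "a" <= ch.lower() <= "z":
        return "letter"
    return "other"


# Deterministic: each (state, input class) pair has at most one next state.
TRANSITIONS = {
    ("start", "digit"): "int",
    ("start", "letter"): "ident",
    ("int", "digit"): "int",
    ("ident", "letter"): "ident",
    ("ident", "digit"): "ident",
}
# Accepting states and the token kind each one produces.
ACCEPTING = {"int": "INT", "ident": "IDENT"}


def scan(text):
    """Run the DFA repeatedly, always taking the longest match."""
    tokens, pos = [], 0
    while pos < len(text):
        if text[pos].isspace():
            pos += 1
            continue
        state, end, last_accept = "start", pos, None
        while end < len(text):
            nxt = TRANSITIONS.get((state, char_class(text[end])))
            if nxt is None:  # no transition: the automaton stops here
                break
            state, end = nxt, end + 1
            if state in ACCEPTING:
                last_accept = (ACCEPTING[state], end)
        if last_accept is None:
            raise SyntaxError(f"no token matches at offset {pos}")
        kind, stop = last_accept
        tokens.append((kind, text[pos:stop]))
        pos = stop
    return tokens


print(scan("foo42 7 x_1"))  # [('IDENT', 'foo42'), ('INT', '7'), ('IDENT', 'x_1')]
```

A lexer generator builds a table like `TRANSITIONS` from the regular expressions in the specification, so scanning costs one table lookup per input character.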

JFlex is designed to work together with:

- the LALR parser generator CUP by Scott Hudson,

- and the Java modification of Berkeley Yacc BYacc/J by Bob Jamison.

It can also be used together with other parser generators like ANTLR or as a standalone tool.

For editing support of JFlex files, see Grammar Kit.

REx Parser Generator

Lex / Yacc

wiki/Lex_(software), wiki/Yacc

- http://dinosaur.compilertools.net/ - Yet Another Compiler-Compiler

Installation: Cygwin includes lex and yacc.

Yacc is used by PHP. See bnf yacc grammar

ANTLR

See ANTLR

Babelfish

Babelfish is a dead project.

Chevrotain

Parser Building Toolkit for JavaScript

JavaCC

JavaCC is a tool used in many applications. It is much like ANTLR, with a few features that differ here and there. However, it generates only Java code.

Used by: BeanShell

Janino

Java : http://janino-compiler.github.io/janino/

Used by: Calcite (Farrago, Optiq)

SableCC

SableCC is a compiler-compiler tool for the Java environment. It handles LALR(1) grammars (for those who remember their grammar categories). In other words, the parsers it generates are bottom-up, unlike those of JavaCC and ANTLR, which are top-down.

SableCC takes an unconventional and interesting approach: it uses object-oriented methodology to construct parsers, which results in generated parser code that is easy to maintain. However, there are some performance issues at this point in time. It generates output in both C++ and Java.
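To show what "bottom-up" means in practice, the sketch below parses by shifting tokens onto a stack and reducing the top of the stack whenever it matches the right-hand side of a rule. It is a deliberately naive, greedy shift-reduce loop for one tiny, hypothetical grammar; a real LALR(1) parser such as the ones SableCC generates is driven by precomputed state tables and one token of lookahead, which this example does not implement.

```python
# Hypothetical grammar, listed longest right-hand side first so that the
# greedy reduction below prefers "E + T" over the shorter rule "T".
#   E : E + T | T
#   T : n          (n stands for any integer literal)
RULES = [
    ("E", ["E", "+", "T"]),
    ("E", ["T"]),
    ("T", ["n"]),
]


def parse(tokens):
    """Return (tree, trace) for a list of token strings such as ['1', '+', '2']."""
    stack = []  # entries are (grammar symbol, subtree)
    trace = []
    for tok in tokens:
        # Shift: push the next token onto the stack.
        if tok.isdigit():
            stack.append(("n", int(tok)))
        else:
            stack.append((tok, tok))
        trace.append(f"shift {tok}")
        # Reduce: replace a matching right-hand side by its left-hand side.
        reduced = True
        while reduced:
            reduced = False
            for lhs, rhs in RULES:
                top = stack[-len(rhs):]
                if [symbol for symbol, _ in top] == rhs:
                    children = [subtree for _, subtree in top]
                    del stack[-len(rhs):]
                    if len(rhs) == 3:
                        subtree = ("+", children[0], children[2])
                    else:
                        subtree = children[0]  # unit rule: pass the child through
                    stack.append((lhs, subtree))
                    trace.append(f"reduce {lhs} -> {' '.join(rhs)}")
                    reduced = True
                    break
    if [symbol for symbol, _ in stack] != ["E"]:
        raise SyntaxError("input is not a valid expression")
    return stack[0][1], trace


tree, trace = parse("1 + 2 + 3".split())
print(tree)  # ('+', ('+', 1, 2), 3)
```

The tree is built from the leaves upward: `1 + 2` is reduced to a node before `3` is even read. A top-down parser would instead start from the rule for `E` and predict which alternative to expand.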

Unified

unified is an interface for processing text using syntax trees. It’s what powers remark, retext, and rehype, but it also allows for processing between multiple syntaxes.

unified enabled new exciting projects like Gatsby to pull in markdown, MDX to embed JSX, and Prettier to format it. It’s used to check code for Storybook, debugger.html (Mozilla), and opensource.guide (GitHub).