About

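An algorithm is a mathematical procedure for solving a specific kind of problem.

For some data mining functions, you can choose among several algorithms. For example, classification can be performed with Decision Tree, Generalized Linear Models, Naive Bayes, Support Vector Machine or Maximum Entropy.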


List

| Algorithm | Function | Type | Description |
|---|---|---|---|
| Decision Tree (DT) | Classification | Supervised | Decision trees extract predictive information in the form of human-understandable rules. The rules are if-then-else expressions; they explain the decisions that lead to the prediction. |
| Generalized Linear Models (GLM) | Classification and Regression | Supervised | GLM implements logistic regression for classification of binary targets and linear regression for continuous targets. GLM classification supports confidence bounds for prediction probabilities. GLM regression supports confidence bounds for predictions. |
| Minimum Description Length (MDL) | Attribute Importance | Supervised | MDL is an information-theoretic model selection principle. MDL assumes that the simplest, most compact representation of the data is the best and most probable explanation of the data. |
| Naive Bayes (NB) | Classification | Supervised | Naive Bayes makes predictions using Bayes' Theorem, which derives the probability of a prediction from the underlying evidence, as observed in the data. |
| Support Vector Machine (SVM) | Classification and Regression | Supervised | Distinct versions of SVM use different kernel functions to handle different types of data sets. Linear and Gaussian (nonlinear) kernels are supported. SVM classification attempts to separate the target classes with the widest possible margin. SVM regression tries to find a continuous function such that the maximum number of data points lie within an epsilon-wide tube around it. |
| Apriori (AP) | Association | Unsupervised | Apriori performs market basket analysis by discovering co-occurring items (frequent itemsets) within a set. Apriori finds rules with support greater than a specified minimum support and confidence greater than a specified minimum confidence. |
| k-Means (KM) | Clustering | Unsupervised | k-Means is a distance-based clustering algorithm that partitions the data into a predetermined number of clusters. Each cluster has a centroid (center of gravity). Cases (individuals within the population) that are in a cluster are close to the centroid. Oracle Data Mining supports an enhanced version of k-Means. It goes beyond the classical implementation by defining a hierarchical parent-child relationship of clusters. |
| Non-Negative Matrix Factorization (NMF) | Feature Extraction | Unsupervised | NMF generates new attributes using linear combinations of the original attributes. The coefficients of the linear combinations are non-negative. During model apply, an NMF model maps the original data into the new set of attributes (features) discovered by the model. |
| One-Class Support Vector Machine (One-Class SVM) | Anomaly Detection | Unsupervised | One-Class SVM builds a profile of one class and, when applied, flags cases that differ from that profile. This allows the detection of rare cases (such as outliers) that are not necessarily related to each other. |
| Orthogonal Partitioning Clustering (O-Cluster or OC) | Clustering | Unsupervised | O-Cluster creates a hierarchical, grid-based clustering model. The algorithm creates clusters that define dense areas in the attribute space. A sensitivity parameter defines the baseline density level. |
| Maximum Entropy (MaxEnt) | Classification | Supervised | |
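The k-Means entry describes the classical loop: assign each case to its nearest centroid, then move each centroid to the center of gravity of its cases. The following is a minimal sketch of that classical (Lloyd's) algorithm in plain Python, assuming numeric cases given as tuples and randomly chosen initial centroids. The function name `kmeans` and its parameters are illustrative only; this is not the Oracle Data Mining API and it does not implement the hierarchical variant mentioned above.

```python
import math
import random


def kmeans(points, k, iterations=100, seed=0):
    """Classical (Lloyd's) k-means on a list of equal-length numeric tuples.

    Returns (centroids, labels), where labels[i] is the cluster of points[i].
    Illustrative sketch: random initialisation, Euclidean distance.
    """
    rng = random.Random(seed)
    # Start from k distinct cases picked at random as initial centroids.
    centroids = [tuple(p) for p in rng.sample(points, k)]
    labels = [0] * len(points)
    for _ in range(iterations):
        # Assignment step: each case joins the cluster with the nearest centroid.
        new_labels = [
            min(range(k), key=lambda c: math.dist(p, centroids[c]))
            for p in points
        ]
        # Update step: move each centroid to the mean (center of gravity)
        # of the cases assigned to it.
        for c in range(k):
            members = [p for p, lab in zip(points, new_labels) if lab == c]
            if members:  # keep the previous centroid if the cluster is empty
                centroids[c] = tuple(sum(xs) / len(members) for xs in zip(*members))
        if new_labels == labels:
            break  # converged: no case changed cluster
        labels = new_labels
    return centroids, labels
```

The number of clusters `k` must be chosen in advance, which is what "a predetermined number of clusters" means in the table.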

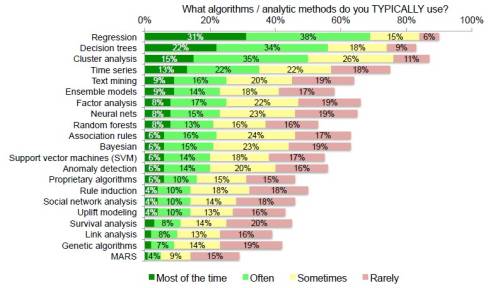
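The Apriori entry relies on two measures: the support of an itemset (the fraction of transactions that contain it) and the confidence of a rule X -> Y (support of X and Y together divided by the support of X). Below is a minimal sketch of the level-wise frequent-itemset search and rule generation, assuming transactions are Python sets of item names. The helper names are hypothetical, and the sketch treats both thresholds as "at least" rather than strictly "greater than".

```python
from itertools import combinations


def apriori(transactions, min_support):
    """Level-wise search for frequent itemsets.

    Returns {frozenset(itemset): support} for every itemset whose support
    (fraction of transactions containing it) is >= min_support.
    """
    baskets = [frozenset(t) for t in transactions]
    n = len(baskets)

    def support(itemset):
        return sum(1 for b in baskets if itemset <= b) / n

    frequent = {}
    candidates = {frozenset([item]) for b in baskets for item in b}
    size = 1
    while candidates:
        # Count step: keep the candidates that reach the minimum support.
        scored = {c: support(c) for c in candidates}
        current = {c for c, s in scored.items() if s >= min_support}
        frequent.update((c, scored[c]) for c in current)
        size += 1
        # Join step: union frequent itemsets into candidates one item larger.
        joined = {a | b for a in current for b in current if len(a | b) == size}
        # Prune step (Apriori property): a candidate can only be frequent
        # if all of its subsets one item smaller are frequent.
        candidates = {
            c for c in joined
            if all(frozenset(sub) in frequent for sub in combinations(c, size - 1))
        }
    return frequent


def association_rules(frequent, min_confidence):
    """Return (antecedent, consequent, support, confidence) for each rule
    X -> Y split from a frequent itemset, where
    confidence = support(X | Y) / support(X) >= min_confidence."""
    found = []
    for itemset, supp in frequent.items():
        for r in range(1, len(itemset)):
            for antecedent in map(frozenset, combinations(itemset, r)):
                # Subsets of a frequent itemset are frequent, so the lookup succeeds.
                confidence = supp / frequent[antecedent]
                if confidence >= min_confidence:
                    found.append((antecedent, itemset - antecedent, supp, confidence))
    return found
```

The prune step is what makes Apriori efficient: support is only counted for candidates whose smaller subsets already passed the threshold.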

Machine learning techniques:

- Random forests

Group method of data handling (GMDH) is a family of inductive algorithms for computer-based mathematical modeling of multi-parametric datasets that features fully automatic structural and parametric optimization of models.

Comparison

Weka

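In the Weka Experimenter, each scheme is compared with the baseline scheme, (1) trees.J48 in the output below. Each cell is the scheme's average percentage of correctly classified instances, and the number in parentheses after the dataset name is the number of results averaged. A result is annotated with:

- `v`: significantly better than the baseline
- `*`: significantly worse than the baseline

No annotation means the difference is not significant at the chosen significance level (5% and 1% are common). The null hypothesis is that the two classifiers perform the same. The final (v/ /*) row counts, for each scheme, the datasets on which it was significantly better, not significantly different, and significantly worse.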
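The markers come from a paired significance test over the per-fold results of the two schemes. To our understanding, the Experimenter's default tester is a corrected resampled paired t-test (Nadeau and Bengio), which inflates the variance because cross-validation folds share training data and their results are therefore not independent. The sketch below assumes per-fold accuracies of two schemes on the same train/test splits and a hard-coded two-sided critical value of 1.984 (5% level, 99 degrees of freedom for 100 results). The function names are hypothetical and this is not Weka's source code.

```python
import math
import statistics


def corrected_paired_t(scores_a, scores_b, test_train_ratio):
    """Corrected resampled paired t statistic (Nadeau and Bengio).

    scores_a, scores_b: results of two schemes on the same k train/test splits.
    test_train_ratio: n_test / n_train of one split (1/9 for 10-fold CV).
    With test_train_ratio=0 this reduces to the ordinary paired t-test.
    """
    diffs = [a - b for a, b in zip(scores_a, scores_b)]
    k = len(diffs)
    mean = statistics.fmean(diffs)
    var = statistics.variance(diffs)  # sample variance, k - 1 denominator
    if var == 0:
        return 0.0 if mean == 0 else math.copysign(math.inf, mean)
    # The ordinary test uses var / k; the extra term accounts for the
    # dependence between splits whose training sets overlap.
    return mean / math.sqrt((1 / k + test_train_ratio) * var)


def weka_style_marker(scores, baseline, test_train_ratio, critical_t=1.984):
    """Return 'v' if significantly better than the baseline, '*' if
    significantly worse, '' otherwise. critical_t=1.984 approximates the
    two-sided 5% value of Student's t with 99 degrees of freedom."""
    t = corrected_paired_t(scores, baseline, test_train_ratio)
    if t > critical_t:
        return "v"
    if t < -critical_t:
        return "*"
    return ""
```

Because the corrected standard error is always larger than the uncorrected one, the corrected test flags fewer differences as significant, which is the intended, more conservative behavior.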

| Dataset | (1) trees.J48 | (2) rules.OneR | (3) rules.ZeroR | (4) bayes.NaiveBayes | (5) lazy.IBk | (6) functions.Logistic | (7) functions.SMO | (8) meta.AdaBoostM1 |
|---|---|---|---|---|---|---|---|---|
| iris (100) | 94.73 | 92.53 | 33.33 * | 95.53 | 95.40 | 97.07 | 96.27 | 95.40 |
| breast-cancer (100) | 74.28 | 66.91 * | 70.30 | 72.70 | 72.85 | 67.77 * | 69.52 * | 71.62 |
| german_credit (100) | 71.25 | 65.91 * | 70.00 | 75.16 v | 71.88 | 75.24 v | 75.09 v | 71.27 |
| pima_diabetes (100) | 74.49 | 71.52 | 65.11 * | 75.75 | 70.62 | 77.47 | 76.80 | 74.92 |
| Glass (100) | 67.63 | 57.40 * | 35.51 * | 49.45 * | 69.95 | 62.84 | 57.36 * | 44.89 * |
| ionosphere (100) | 89.74 | 82.28 * | 64.10 * | 82.17 * | 87.10 | 87.72 | 88.07 | 90.89 |
| (v/ /*) | | (0/2/4) | (0/2/4) | (1/3/2) | (0/6/0) | (1/4/1) | (1/3/2) | (0/5/1) |
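The (v/ /*) row is a per-column count of the markers. A small sketch that reproduces it, with the markers transcribed by hand from the result table (an empty string means no marker); the names `tally` and `MARKERS` are illustrative only.

```python
def tally(markers):
    """Return (better, no significant difference, worse) for one scheme,
    i.e. the (v/ /*) summary triple."""
    better = markers.count("v")
    worse = markers.count("*")
    return better, len(markers) - better - worse, worse


# Markers per scheme, in dataset order:
# iris, breast-cancer, german_credit, pima_diabetes, Glass, ionosphere.
MARKERS = {
    "(2) rules.OneR": ["", "*", "*", "", "*", "*"],
    "(3) rules.ZeroR": ["*", "", "", "*", "*", "*"],
    "(4) bayes.NaiveBayes": ["", "", "v", "", "*", "*"],
    "(5) lazy.IBk": ["", "", "", "", "", ""],
    "(6) functions.Logistic": ["", "*", "v", "", "", ""],
    "(7) functions.SMO": ["", "*", "v", "", "*", ""],
    "(8) meta.AdaBoostM1": ["", "", "", "", "*", ""],
}

for scheme, markers in MARKERS.items():
    print(scheme, "(%d/%d/%d)" % tally(markers))
```

Read this way, lazy.IBk (0/6/0) is statistically indistinguishable from J48 on all six datasets, while the ZeroR baseline-of-baselines loses on four of them.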

Key:

- (1) `trees.J48 '-C 0.25 -M 2' -217733168393644444`
- (2) `rules.OneR '-B 6' -3459427003147861443`
- (3) `rules.ZeroR '' 48055541465867954`
- (4) `bayes.NaiveBayes '' 5995231201785697655`
- (5) `lazy.IBk '-K 1 -W 0 -A \"weka.core.neighboursearch.LinearNNSearch -A \\\"weka.core.EuclideanDistance -R first-last\\\"\"' -3080186098777067172`
- (6) `functions.Logistic '-R 1.0E-8 -M -1' 3932117032546553727`
- (7) `functions.SMO '-C 1.0 -L 0.001 -P 1.0E-12 -N 0 -V -1 -W 1 -K \"functions.supportVector.PolyKernel -C 250007 -E 1.0\"' -6585883636378691736`
- (8) `meta.AdaBoostM1 '-P 100 -S 1 -I 10 -W trees.DecisionStump' -7378107808933117974`